Artificial General Intelligence (AGI) refers to a type of artificial intelligence that has the ability to understand, learn, and apply its intelligence to a wide variety of problems, much like a human being. Unlike narrow or weak AI, which is designed and trained for specific tasks (like language translation, playing a game, or image recognition), AGI can theoretically perform any intellectual task that a human being can. It involves the capability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly, and learn from experience.

Resolves YES if such a system is created and publicly announced before January 1st, 2026.

MOD EDIT (Nov 2, 2025 - @Stralor)

This market uses /dreev/in-what-year-will-we-have-agi as its baseline. If that resolves NO for this market's year (and every year earlier), this also resolves NO. If it resolves YES, we dig into the nitty-gritty.

Please see THIS COMMENT from the 2026 market for more guidance about what a YES resolution might look like.

Here are markets with the same criteria:

/RemNiFHfMN/did-agi-emerge-in-2023

/RemNiFHfMN/will-we-get-agi-before-2025

/RemNiFHfMN/will-we-get-agi-before-2026-3d9bfaa96a61 (this question)

/RemNiFHfMN/will-we-get-agi-before-2027-d7b5f2b00ace

/RemNiFHfMN/will-we-get-agi-before-2028-ff560f9e9346

/RemNiFHfMN/will-we-get-agi-before-2029-ef1c187271ed

/RemNiFHfMN/will-we-get-agi-before-2030

/RemNiFHfMN/will-we-get-agi-before-2031

/RemNiFHfMN/will-we-get-agi-before-2032

/RemNiFHfMN/will-we-get-agi-before-2033

/RemNiFHfMN/will-we-get-agi-before-2034

/RemNiFHfMN/will-we-get-agi-before-2033-34ec8e1d00fd

/RemNiFHfMN/will-we-get-agi-before-2036

/RemNiFHfMN/will-we-get-agi-before-2037

/RemNiFHfMN/will-we-get-agi-before-2038

/RemNiFHfMN/will-we-get-agi-before-2039

/RemNiFHfMN/will-we-get-agi-before-2040

/RemNiFHfMN/will-we-get-agi-before-2041

/RemNiFHfMN/will-we-get-agi-before-2042

/RemNiFHfMN/will-we-get-agi-before-2043

/RemNiFHfMN/will-we-get-agi-before-2044

/RemNi/will-we-get-agi-before-2045

/RemNi/will-we-get-agi-before-2046

/RemNi/will-we-get-agi-before-2047

/RemNi/will-we-get-agi-before-2048

Related markets:

/RemNi/will-we-get-asi-before-2027

/RemNi/will-we-get-asi-before-2028

/RemNiFHfMN/will-we-get-asi-before-2029

/RemNiFHfMN/will-we-get-asi-before-2030

/RemNiFHfMN/will-we-get-asi-before-2031

/RemNiFHfMN/will-we-get-asi-before-2032

/RemNiFHfMN/will-we-get-asi-before-2033

/RemNi/will-we-get-asi-before-2034

/RemNi/will-we-get-asi-before-2035

Other questions for 2026:

/RemNi/will-there-be-a-crewed-mission-to-l-0e0a12a57167

/RemNi/will-we-get-room-temperature-superc-ebfceb8eefc5

/RemNi/will-we-discover-alien-life-before-cbfe304a2ed7

/RemNi/will-a-significant-ai-generated-mem-1760ddcaf500

/RemNi/will-we-get-fusion-reactors-before-a380452919f1

/RemNi/will-we-get-a-cure-for-cancer-befor-e2cd2abbbed6

Other reference points for AGI:

/RemNi/will-we-get-agi-before-vladimir-put

/RemNi/will-we-get-agi-before-xi-jinping-s

/RemNi/will-we-get-agi-before-a-human-vent

/RemNi/will-we-get-agi-before-a-human-vent-549ed4a31a05

/RemNi/will-we-get-agi-before-we-get-room

/RemNi/will-we-get-agi-before-we-discover

Heyo @traders, I'm stepping in to make an executive decision here so you can all move forward after this has been stuck in the mod queue for so long. I've dug into a fair bit of the convo here and in the thread on Discord. (Thanks for recapping for me, Daniel!)

I hear the desire to craft this into the perfect market, the desire to mirror another market and keep it simple for arbitrage, and the desire to not mirror it due to this one's unique wording.

Here's my thinking:

It's best not to just N/A these markets and be done with it. Y'all have invested for the long haul, and it would be a shame to take that away.

Perfect mirroring defeats a lot of the purpose here, arbitrage be damned. @dreev's market is good, but it already exists, and it might be missing some je ne sais quoi that this one has.

We don't have to craft this one into the ideal market. We can set imperfect criteria and let it succeed or fail on its own merits. Other markets (like Daniel's) are much easier to adapt because they have active creators.

I might have chosen different criteria and definitions, but I like to moderate based on the criteria we get from the creator while listening to traders' expectations and understanding of those criteria.

Whatever we leave behind here needs to be simple enough for traders to act on routinely, and to give some guidance to whichever mod comes along to resolve it down the line.

I like what @CamillePerrin said:

Because there are no clear criteria here, I assume this will resolve Yes only when it's incontrovertibly clear to the non-rat-adjacent man-in-the-street that there is AGI, which I'm also fine with.

So, here's what I want to do:

This market series will use Daniel's market (/dreev/in-what-year-will-we-have-agi) as the baseline. If a year resolves NO there, then it resolves NO here...

...and if or when a year resolves YES there, we start to assess these markets on their special criteria.

If Daniel's market falls into disrepair for whatever reason, or we run past its end date, a market of similar strength will be used as the baseline.

Ultimately, I'm not necessarily the one who will make the final resolution decision here once it gets interesting, so whoever does will have to work with the traders to really narrow down an answer. We don't have satisfactory tests for this right now, and it's clear from the market description and the sentiments of the traders here that no existing test is a reliable measure of what this market is measuring, but maybe there will be better tools to answer these questions in the future! Until then, here are my suggestions:

!! For this market to resolve YES, we want to see that it's clear to the average layperson that a true human-level artificial intelligence exists. !!

Things to consider:

A big AI company saying "We have AGI!" is definitely insufficient. Nor can we trust your rat friend, hacker sister, or techno-spiritualist uncle. Likewise, any soundbites from an otherwise trustworthy news outlet that just repeat what those people said are also out the window.

"Learn quickly, and learn from experience" is something many of you have highlighted as both important and imprecise, and it's what makes you value this particular market so highly. My read on that: what matters isn't that an AGI is suited to a given task, but that it's dynamic and complex, able to make (potentially novice) mistakes and then rarely if ever repeat them. Therefore, we'd like it to continue acting and thinking in the background, not only when prompted; learning and planning necessitate this degree of autonomy. And true learning from experience means it should ideally have Object Permanence in all the ways that matter as and to a human, including with:

conversation and interpersonal dynamics

task memory and plan follow-through

its own personality, developed not by directed human prompting or design but as an artifact of its own learning and socialization

consciousness, in the limited sense of understanding that it and/or its environment exists and being able to frame its learnings and actions around that concept (sapience rather than simply sentience)

AGI probably needs to become a new, accepted part of the world that people talk about casually, the way people did in the '90s about the internet as it went mainstream and people got access to it. Keeping to the internet analogy: conversations on the news or over the family dinner table wouldn't be debates about whether it "actually lets computers around the world talk to each other"; instead, people would by and large assume that's true and talk about how cool it is that we can send each other email or post text animations on our own GeoCities pages.

---

I encourage you to bet with care and present strong evidence if that time comes (use the Reasonable Person Doctrine), and accept that it might be me or some other mod who has to make a gut call.

Remember, as Daniel said, this market has no one to answer clarifying questions in the long run. Trade with the understanding of that risk.

@Stralor “consciousness, in the sense of understanding that it and/or its environment exists and being able to frame its learnings and actions around that concept (sapience rather than simply sentience)”

Just to be clear, if an AI system meets all the criteria regarding intelligence, learning, situational awareness, etc., it doesn’t also need to be conscious in the sense of experiencing subjective sensations, i.e., having qualia, right? I don’t think anyone was ever betting on whether AI/AGI will be conscious in addition to learning, adapting, reasoning, planning, etc. I certainly wasn’t.

@Stralor I've added a disclaimer to the description in this market and every market through 2034, where /dreev/in-what-year-will-we-have-agi runs out and we'll need to replace it with another baseline, assuming these markets survive that long.

@Stralor I think by some point in 2027 there will have been a breakthrough in continual learning to make the "learn quickly and learn from experience" aspect more generally perceived as a requirement for AGI systems. Right now we're trying to abstractly theorize about these algorithms without having seen this breakthrough. It will be much more obvious in hindsight what the implications are and how systems that can and cannot learn from experience are distinct (similar to reasoning/inference-time scaling with LLMs compared to basic LLMs).

Coupled with the "moonshot fully automated AI researcher" project OpenAI has on their OKRs for 2028, I think there will be a strong case to resolve these questions YES within the next few years.

@DavidHiggs Good question! I think we're diving into the philosophy of consciousness at that point. I would argue that learning necessitates having a frame of reference to base that learning off of. Indeed, if you could prove it has qualia, I think it'd be a great step to resolving this market. But, no, I'm not suggesting that an AGI must formally have them for this to resolve.

To not get too philosophical, for the purposes of this market I suggest that consciousness is about awareness (as a prerequisite for active learning) rather than about proven experiential sensations. I'm not asking anyone to prove the existence of a soul.

Nor should my guidelines be taken as hard and fast rules. I'm providing context that we can use to make a more informed decision, but I have no infallible test.

@Stralor OK, I think we’re on the same page then. I would say terms like (situational) awareness or contextual understanding are probably more precise, without (as you say) getting into the philosophical weeds.

Although even then, what if it somehow has continual learning, reasoning, planning, adaptability, agentic action and generally seems smarter than humans but without this sort of (not necessarily conscious) self-awareness? Seems like a possibly redundant or overly strict criterion if we can just talk about learning and planning independently.

@DavidHiggs I think this market refers only to things that are measurable, in humans or in machines. Discussing phenomena that cannot be measured is outside of the context of this market.

@DavidHiggs Great! If it's pretty well demonstrated that it has all of those qualities and you don't need my guidelines to help figure it out then I'll be relieved that my guidelines won't be super necessary! I'd bet they're more required than you think, but that's my take and not a deal-breaker.

I'm gathering my notes from the comments here, and discussions with other mods privately, on the resolution criteria for all of these markets created by the deleted user RemNi.

First, note my own conflict of interest: I'm betting heavily on NO for AGI in the next few years in these markets. At this point the definition we use for AGI doesn't matter too much to my betting but I expect the Drop-In Remote Worker definition to be harder to satisfy than the Extreme Turing Test version.

My ironic bias is to go with the latter, so I can't be accused of bias! My sense from the comments is that most traders wouldn't like that. Many seem to have specifically bet NO because it sounded like the creator wouldn't resolve YES based on any Turing test.

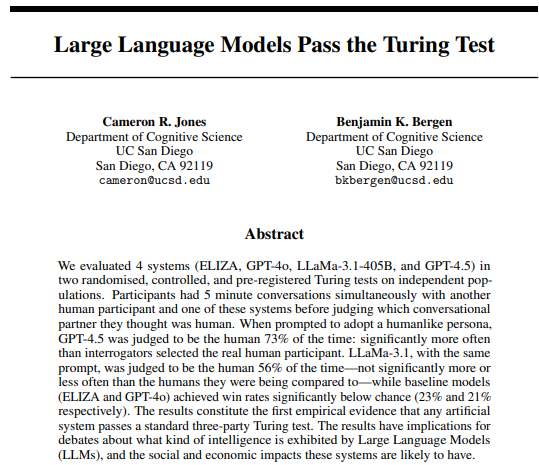

(One thing we seem to have universal agreement on is that passing a long, informed, adversarial Turing test is a necessary if not sufficient condition for these markets to resolve YES.)

But the Turing test version is also trying hard to test actual AGI. Being grilled for hours by an expert in AI, with the human foils being STEM PhDs -- that's not totally dissimilar from the drop-in remote worker version.

The other big advantage of the Extreme Turing Test definition is how focal it is. It's what the source market for the big countdown timer at manifold.markets/ai references. Though that market currently ends with this caveat:

Note: These criteria are still in draft form, and may be updated to better match the spirit of the question. Your feedback is welcome in the comments.

So it's not too late for the so-called Turing test version to more closely match what traders here have had in mind.

Not to say that any of that should override creator intent or traders' interpretation thereof in these markets. But without the creator, we do want to pick another market for these to mirror. That's important since traders need clarity and a way to ask clarifying questions. This market has no one to answer such questions.

In case this is helpful, I hereby solemnly aver that my own AGI market is my honest attempt at pinning down the best definition of AGI for betting purposes, avoiding technicalities with Turing tests, and that I believe also matches the spirit of these RemNi AGI markets.

PS: I think ASI is easier. ASI = incomprehensible machine gods. We'll know this when we see it. Either humans all die or we cure death and whatnot. The first time an AGI market resolves YES we can worry about the ASI ones.

@dreev the definition used for ASI in these markets is very clearly not "incomprehensible machine gods"

@dreev the definition you're using in your own AGI market is missing a few of the elements contained in this one's description. The <drop in remote worker> is insufficient since it doesn't say anything about things like continual learning etc. The criteria of this market are stronger since they require the system to be able to <learn quickly> and <learn from experience>.

@dreev it's clear that your definition of AGI/ASI does not match the definition/spirit of these markets.

@Dulaman while you are technically correct about potential differences in criteria, continual learning looks to be one of the key limitations, if not the only one, currently preventing AI systems from scaling right up to drop-in remote worker capabilities.

I think creating any kind of true drop-in remote worker is somewhat harder than just solving continuous learning, and also theoretically doable without a breakthrough in real-time learning, but I mostly expect the learning breakthrough to come a year or two earlier.

@DavidHiggs It depends on the definition of <drop in remote worker>. It's certainly possible to have a <drop in remote worker> on a code repository that can replace a human programmer without having the ability to learn rapidly and learn from experience. In that case the system would not be AGI since it would not have continual learning as per this question's criteria. This is why <drop in remote worker> is a weaker requirement than <AGI>.

@Dulaman well yes, for some applications like a coding agent that might be possible. But generally when people talk about drop in remote workers, they’re talking about a general AI system/set of systems that can do over 50/90/99% of all remote jobs. That will very likely require continuous learning.

@DavidHiggs sure, but that's not what @dreev is referring to. He's referring to Leopold Aschenbrenner's definition of <drop in remote worker>, which is clearly much weaker than this question's criteria:

@DavidHiggs the point is that attempting to co-opt this question to point it towards a much weaker definition is a mistake. This question's criteria are well thought out, given that they were written in 2023.

@Dulaman ah, I actually never read Situational Awareness, I guess I was assuming things about it based on how the dialogue around AGI timelines has evolved since then.

At any rate, going with the more robust >x% of economically valuable remote tasks/jobs over the SA remote worker criteria explicitly should still work? Or would you think that is still watered down compared to this question’s original criteria?

@DavidHiggs I think percent of economically valuable remote tasks/jobs is a better proxy for sure. But then the question turns into one of logistics and implementation instead of capabilities. So it's an imperfect proxy.

@Dulaman well, it can be the capability to do a given percent of economic tasks rather than the actual implementation/market penetration. Although that’s harder to judge.

@DavidHiggs definitely would be a good starting point if someone wants to write a new question / set of questions

@dreev I'm amused by

The other big advantage of the Extreme Turing Test definition is how focal it is. It's what the source market for the big countdown timer at manifold.markets/ai references. Though that market currently ends with this caveat:

Note: These criteria are still in draft form, and may be updated to better match the spirit of the question. Your feedback is welcome in the comments.

Yours also has a similar disclaimer!

Caveat aleator: the definition of AGI for this market is a work in progress -- ask clarifying questions!

https://arxiv.org/pdf/2503.23674

Participants picked GPT-4.5, prompted to act human, as the real person 73% of the time, well above chance. Only GPT-4.5 passed the test.